I have been doing SEO analysis and critiques for some new clients lately, and it is surprising how common some mistakes are across websites. Here are 8 things you should be doing to stay on top of SEO.

1. Implement Google Analytics – this involves putting a few lines of JavaScript in a part of the site that appears on every page (the footer is recommended). This will really help with understanding where visitors come from geographically and how they got to your site. It can also integrate with AdWords.

- Ease of implementation – Very easy.
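Because the snippet has to appear on every page, it is worth confirming after deployment that it really does. Below is a minimal Python sketch that assumes you already have each page's rendered HTML in hand (fetched however you like); the tracking ID `UA-12345-1` and the page contents are made-up placeholders, not real values. A plain substring check is only a rough heuristic, but it catches templates that forgot the footer include.

```python
# Hypothetical tracking ID; replace it with the property ID from your own Analytics account.
TRACKING_ID = "UA-12345-1"


def pages_missing_tracking(pages, tracking_id=TRACKING_ID):
    """Return the URLs whose HTML does not contain the tracking ID.

    pages maps each URL to that page's rendered HTML as a string.
    """
    return [url for url, html in pages.items() if tracking_id not in html]


# Hypothetical pages: the contact page template is missing the snippet.
site = {
    "http://www.sitename.com/": "<html><footer><script>/* UA-12345-1 */</script></footer></html>",
    "http://www.sitename.com/contact": "<html><footer></footer></html>",
}
print(pages_missing_tracking(site))  # ['http://www.sitename.com/contact']
```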

2. Verify the site with Google Webmaster Tools – you just need to place a blank .html file, with the specific filename Google gives you, in the root of the site. This will give you ranking position data for the Google SERPs. You also really need a sitemap.xml file placed in the root directory (e.g. http://www.sitename.com/sitemap.xml). This allows Google to index pages it may not otherwise have found because of an excessively deep site hierarchy or a lower level of trust/authority.

- Ease of implementation – Easy to verify. Depending on the CMS, you may need a tool to create a proper XML sitemap file, and that part may be difficult.
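If your CMS has no sitemap tool, a basic sitemap.xml is simple enough to generate yourself. This is a minimal Python sketch of the sitemaps.org format (a `<urlset>` in the sitemap namespace containing one `<url><loc>` per page); the page list is hypothetical, and optional fields such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return the text of a minimal sitemap.xml listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&", which the sitemap format requires.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical page list; in practice it would come from your CMS or a crawl of the site.
print(build_sitemap(["http://www.sitename.com/", "http://www.sitename.com/about/"]))
```

Save the output as sitemap.xml in the site root, then submit it in Google Webmaster Tools.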

3. Prevent domain canonicalization issues – redirect http://sitename.com, http://www.sitename.com/index.php and http://sitename.com/index.php (the extension may be .html, .asp, etc.) all to one preferred version: either http://www.sitename.com/ or http://sitename.com/.

Google could treat each of these as a separate site, which can lead to duplicate content issues and PageRank being diluted across multiple versions. You can also specify in Google Webmaster Tools which version (www or non-www) Google should use.

- Ease of implementation – Moderate. It depends on how 301 redirects are handled on the server. Linux-based servers usually use an .htaccess file, while Windows servers generally require a tool such as ISAPI Rewrite, which makes Windows more complicated.

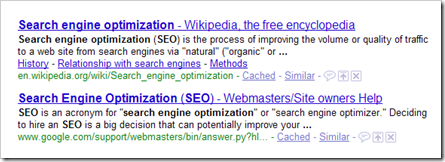
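The redirect itself is configured on the server (.htaccess or ISAPI Rewrite), but the decision it encodes is easy to sketch. Here is that logic in Python, assuming the www version was chosen as the preferred one and that the `index.*` filenames listed are the only ones the site uses; both are illustrative assumptions. Running a list of known URLs through it can help you check your rewrite rules before deploying them.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: the www version is the preferred (canonical) host.
CANONICAL_HOST = "www.sitename.com"
# Assumption: the index filenames this hypothetical site actually serves.
INDEX_PAGES = ("index.php", "index.html", "index.htm", "index.asp")


def canonical_url(url):
    """Force the preferred host and strip a trailing index file from the path."""
    parts = urlsplit(url)
    path = parts.path or "/"
    head, _, last = path.rpartition("/")
    if last.lower() in INDEX_PAGES:
        path = head + "/"
    return urlunsplit((parts.scheme, CANONICAL_HOST, path, parts.query, parts.fragment))


def redirect_target(url):
    """Return the URL to 301-redirect to, or None if url is already canonical."""
    target = canonical_url(url)
    return None if target == url else target


for requested in ("http://sitename.com", "http://www.sitename.com/index.php", "http://www.sitename.com/"):
    print(requested, "->", redirect_target(requested))
```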

4. Use correct keyword targeting – this item has the most facets. You need to define a set of keywords/phrases to target per page (avoiding keyword cannibalization). Then titles and descriptions need to be written for each page (at least for upper-level pages). Every page needs a unique title.

- The title is the most important part and should be no longer than 65 characters (including spaces). Put the most important keywords at the front of the tag, then at the back. You may also need a brand mention in the title, because credibility is important for click-through rate (CTR).

- Descriptions should be no longer than 165 characters (including spaces). The description tag matters more for click-through rate than for SEO, but you should still weave keywords in naturally.

- Ease of implementation – Moderate. Research and writing are time-consuming, and implementation may be harder depending on the CMS.
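Once titles and descriptions are written, the length limits and the unique-title rule above are easy to check mechanically. A minimal Python sketch, assuming you have exported each page's title and description into a dictionary (the pages shown are hypothetical); it only checks length and uniqueness, not keyword placement.

```python
from collections import Counter

# Limits from the checklist above (characters, including spaces).
MAX_TITLE = 65
MAX_DESCRIPTION = 165


def audit_pages(pages):
    """Flag over-long titles/descriptions and titles used on more than one page.

    pages maps each URL to a (title, description) pair.
    """
    problems = []
    title_counts = Counter(title for title, _ in pages.values())
    for url, (title, description) in pages.items():
        if len(title) > MAX_TITLE:
            problems.append(f"{url}: title is {len(title)} characters (max {MAX_TITLE})")
        if len(description) > MAX_DESCRIPTION:
            problems.append(
                f"{url}: description is {len(description)} characters (max {MAX_DESCRIPTION})"
            )
        if title_counts[title] > 1:
            problems.append(f"{url}: title is not unique")
    return problems


# Hypothetical pages: one over-long description and one duplicated title.
pages = {
    "/": ("Sitename | Home", "Short description."),
    "/services/": ("Services | Sitename", "x" * 170),
    "/services/old/": ("Services | Sitename", "Another description."),
}
for problem in audit_pages(pages):
    print(problem)
```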

5. Use SEObook’s RankChecker – track your position in the SERPs by keyword. It requires Firefox to work (you can install Firefox on a USB drive if need be).

- Ease of implementation – Very easy (assuming you have Firefox).

6. Link building – this may be the most difficult item, but if you can find opportunities for backlink acquisition, it will greatly help SEO. It may involve reaching out to sites, submitting directory and local listings, and partnering with relevant niche blogs. Good internal linking can also be an effective strategy.

- Ease of implementation – Difficult (time-consuming).

7. Use Google AdWords – identify long-tail keywords and run a global campaign and, if applicable, a local campaign.

This has the most straightforward ROI calculation. You can see how many impressions your ads got and their click-through rate, and you can set up a goal (such as a request for information). Once you know how many people clicked through or completed the goal, you can calculate a cost of acquisition.

- Ease of implementation – Depends. Easy if you hire someone who knows what they’re doing; moderately difficult if you learn it yourself.
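The cost-of-acquisition arithmetic described above is just a few divisions. A small Python sketch, using made-up campaign figures purely for illustration (10,000 impressions, 200 clicks, 10 completed goals, 300 in ad spend); metrics that would divide by zero come back as `None`.

```python
def campaign_metrics(impressions, clicks, conversions, cost):
    """Compute click-through rate, cost per click and cost per acquisition.

    conversions is the number of completed goals (e.g. information requests);
    cost is the total ad spend over the same period.
    """
    return {
        "ctr": clicks / impressions if impressions else None,
        "cost_per_click": cost / clicks if clicks else None,
        "cost_per_acquisition": cost / conversions if conversions else None,
    }


# Hypothetical figures for one campaign.
metrics = campaign_metrics(impressions=10_000, clicks=200, conversions=10, cost=300.0)
print(metrics)  # {'ctr': 0.02, 'cost_per_click': 1.5, 'cost_per_acquisition': 30.0}
```

In this example a 2% CTR and a 1.50 cost per click work out to 30 per completed goal. That figure is what you compare against the value of a new lead or customer.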

8. Sculpt which pages are indexed – use rel="nofollow" on links to pages you don’t want Google to crawl or index, and use a robots.txt file to keep robots from crawling those pages. Typical candidates are pages like “register”, “legal” and “privacy policy” that you don’t want in the SERPs.

- Ease of implementation – Difficult. Putting the rel attribute on links is easy, but the robots.txt file must be properly formatted; a mistake there could exclude your whole site from indexing.
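Because a single wrong line can block everything, it helps to test a robots.txt before uploading it. The sketch below uses Python's standard `urllib.robotparser` to compare a targeted file with the classic `Disallow: /` mistake. The paths are hypothetical, and this parser implements the basic prefix-matching rules, so it may not interpret every extension exactly as Googlebot does.

```python
from urllib.robotparser import RobotFileParser

# A targeted robots.txt that keeps crawlers out of a few hypothetical paths.
TARGETED = """\
User-agent: *
Disallow: /register
Disallow: /legal
Disallow: /privacy-policy
"""

# The classic mistake: a single slash blocks the entire site.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if robots_txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for name, rules in (("targeted", TARGETED), ("block-all", BLOCK_ALL)):
    for url in ("http://www.sitename.com/", "http://www.sitename.com/register"):
        print(name, url, is_crawlable(rules, url))
```

Disallow rules match by prefix, so `Disallow: /legal` would also block a page such as `/legal-notices`.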

Bonus:

Use the Website Grader tool to get a quick SEO snapshot, but take its results with a grain of salt.

(This post was adapted and depersonalized from an SEO critique I performed for a client.)

I would avoid using robots.txt for everything; see http://www.seobook.com/robots-txt-vs-rel-nofoll…

We're using Google Analytics to monitor our websites. It's free and easy to use.